SweoIG/TaskForces/CommunityProjects/LinkingOpenData

News

- 2017-12-03: The 10th edition of the Linked Data on the Web workshop will take place at WWW2017 in Perth, AUstralia. The paper submission deadline for the workshop is 29 January 2017.

- 2017-20-02: An updated version of the LOD Cloud diagram has been published. The new version contains 1,146 linked datasets which are connected by RDF links.

- 2016-11-02: The 9th edition of the Linked Data on the Web workshop will take place at WWW2016 in Montreal, Canada. The paper submission deadline for the workshop is 24 January 2016.

- 2015-05-19: The Linked Data on the Web (LDOW2015) workshop took place at WWW2015 in Florence, Italy. The proceedings of the workshop at found here.

- 2014-09-10: An updated version of the LOD Cloud diagram has been published. The new version contains 570 linked datasets which are connected by 2909 linksets. New statistics about the adoption of the Linked Data best practices are found in an updated version of the State of the LOD Cloud document.

- 2014-04-26: The 7th edition of the Linked Data on the Web workshop took place at WWW2014 in Seoul, Korea. The workshop was attended by around 80 people. The proceedings of the workshop are published as CEUR-WS Vol-1184.

- 2013-04-25: The accepted papers of the 6th Linked Data on the Web Workshop (LDOW2013) are online now. LDOW2013 will take place at WWW2013 in May 2013, Rio de Janeiro, Brazil.

- 2013-04-25: The Ordnance Survey, Great Britain's national mapping agency, has launched its new Linked Data service.

- 2012-03-25: The accepted papers of the 5th Linked Data on the Web Workshop (LDOW2012) are online now. LDOW2012 will take place at WWW2012 in April 2012, Lyon, France. Beside of the paper presentations, there will be a panel discussion at the workshop about the deployment of Linked Data in different application domains and the motivation, value proposition and business models behind these deployments, especially in relation to complementary and alternative techniques for data provision (e.g. Web APIs, Microdata, Microformats) and proprietary data sharing platforms (e.g. Facebook, Twitter, Flickr, LastFM).

- 2012-03-08: OpenLink Software's LOD Cloud Cache increased to 52-Billion+ Triples! Recent stats visible in Google Docs Spreadsheet, populated directly via SPARQL queries.

- 2012-02-19: Nice Linked Open Data promotional video released by Europeana project.

- 2012-02-03: LODStats released. LODStats constantly monitors the Linked Data cloud and calculates statistics about the content of the data sets, their accessability as well as the usage of different vocabularies. LODStats complements the meta-information about LOD data sets provided by CKAN and LOV.

- 2012-01-19: New free eBook Linked Open Data: The Essentials - A Quick Start Guide for Decision Makers published.

- 2011-11-10: The W3C has launched a community directory of Linked Data projects and suppliers in the domain of eGovernment.

- 2011-10-12: Facebook has started to support RDF and Linked Data URIs and now provides access to parts of its user data via a Linked Data API. For details, see these posts (1, 2) by Jesse Weaver.

- 2011-09-19: Updated version of the LOD Cloud Diagram and State of the LOD Cloud statistics published. Thanks a lot to everybody who contributed to the creation of the diagram by providing meta-information about the data sets on the Data Hub. Altogether, the data sets in the LOD cloud currently consist of over 31 billion RDF triples and are interlinked by around 504 million RDF links.

- 2011-06-02: Google, Yahoo and Microsoft have agreed on vocabularies for publishing strucutred data on the Web. Their shared 'ontology' is maintained on schema.org. The Linked Data community congratulates to this important step forward towards making Web content more strucuted and thus allowing applications to do smarter things with it!

- 2011-03-29: The 4th Linked Data on the Web Workshop (LDOW2011) took place at WWW2011 in Hyderabad, India. The workshop was attended by around 70 people and we had lots of interesting discussions. The papers and presentation slides are available from the workshop website. Photos from the workshop are found on flickr.

- 2011-02-17: New book published: Linked Data - Evolving the Web into a Global Data Space (Tom Heath, Chris Bizer).

- 2010-12-07: Linked Open Data star badges - express your support for LOD data according to TimBL's 5-star plan.

- 2010-11-05: Linked Data Specifications - provides a comprehensive and up-to-date listing of the relevant Linked Data specs.

- 2010-10-28: Enable Cross-Origin Resource Sharing (CORS)! An advocacy site for overcoming the same-origin policy (enables browser/Ajax access for Linked Data sources).

- 2010-10-20: Linked Enterprise Data book released. The book records some of the earliest production applications of linking en- terprise data and is freely available as HTML in addition to being published by Springer.

- 2010-09-24: New version of the LOD Cloud diagram released Over the last weeks, vrious members of the LOD community have collected detailed meta-data about linked datasets on CKAN. This data was used to draw a new September 2010 version of the LOD diagram. The new diagram contains 203 linked datasets which together serve 25 billion RDF triples to the Web and are interconnected by 395 million RDF links. State of the LOD Cloud provides further statistics about the datasets in the cloud.

- 2010-05-28: Newsweek is now using RDFa, Dublin Core, FOAF and SIOC to annotate the articles on their website.

- 2010-05-28: The W3C has started a Library Linked Data Incubator Group in order to help increase global interoperability of library data on the Web.

- 2010-05-14: The US government portal Data.gov makes around 400 of its datasets available as Linked Data. Altogether the datasets sum up to 6.4 billion triples.

- 2010-04-27: Two Linked Data events took place at the World Wide Web conference in Raleigh: 3rd Linked Data on the Web workshop and W3C LOD Camp.

- 2010-04-19: The German National Library (DNB) has published its person data (PND dataset describing 1.8 million people) and its subject headings (SWD; 164,000 headings) as Linked Data on the Web. More details, Paper about their vision and experience with Linked Data.

- 2010-04-12: The Hungarian National Library has published its entire OPAC and Digital Library as Linked Data. More details ...

- 2010-01-21: BBC News - Tim Berners-Lee unveils government data project. The website for the project is at data.gov.uk.

- 2010-01-12: A Japanese translation of this page is available here. Thanks a lot to Noboru Shimizu and Shuji Takashima for translating the page and for promoting Linked Data in Japan. An ongoing (traditional) chinese translation is also now(2/10) avaiable. More transltions are welcome!

Project Description

The Open Data Movement aims at making data freely available to everyone. There are already various interesting open data sets available on the Web. Examples include Wikipedia, Wikibooks, Geonames, MusicBrainz, WordNet, the DBLP bibliography and many more which are published under Creative Commons or Talis licenses.

The goal of the W3C SWEO Linking Open Data community project is to extend the Web with a data commons by publishing various open data sets as RDF on the Web and by setting RDF links between data items from different data sources.

RDF links enable you to navigate from a data item within one data source to related data items within other sources using a Semantic Web browser. RDF links can also be followed by the crawlers of Semantic Web search engines, which may provide sophisticated search and query capabilities over crawled data. As query results are structured data and not just links to HTML pages, they can be used within other applications.

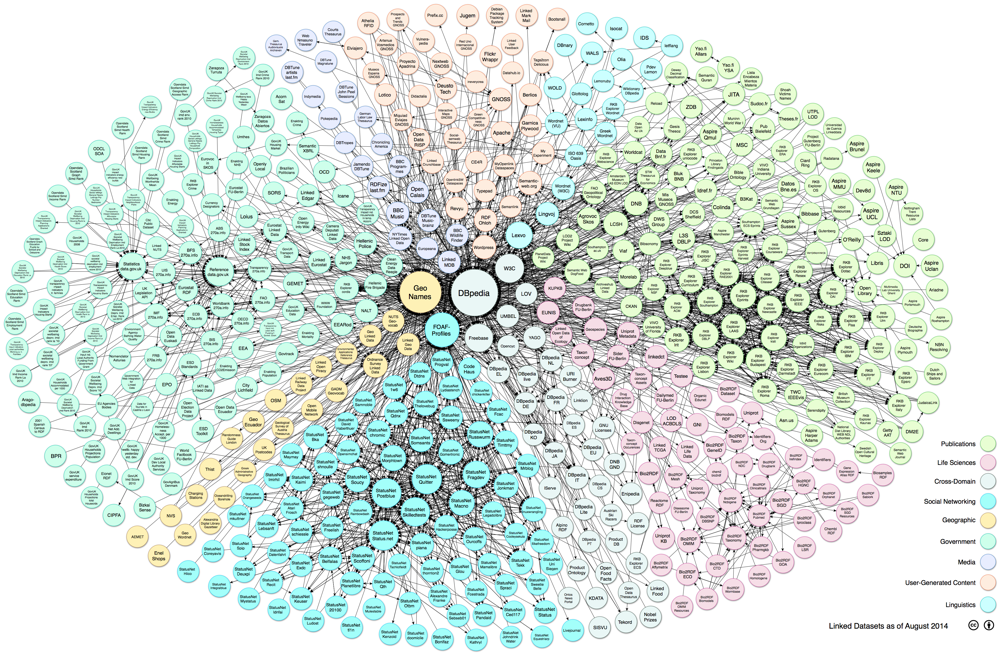

The figures below show the data sets that have been published and interlinked by the project so far. Collectively, the 570 data sets are connected by 2909 link sets.

Clickable version of this diagram. SVG version of this diagram. PDF version of this diagram. White version of this diagram (SVG, PDF). Older versions of the diagram. The raw data that was used to draw the diagram is maintained by the LOD community on the DataHub and can be accessed via the CKAN API.

The figure below, offered by the UMBEL project, shows (some of) the class-level interlinking of the data dictionaries (shared vocabularies, schemas, ontologies) associated with the data sets shown above. Click the image for a node-clickable version. More information about class-level/vocabulary-level interlinking is provided by the Linked Open Vocabularies (LOV) project.

Project Pages

The project collects relevant material on several wiki pages. Please feel free to add additional material, so that we get an overview about what is already there and what is currently happening.

- Data Sets

- RDFizers and ConverterToRdf

- Publishing Tools

- Semantic Web Browsers and Client Libraries

- Semantic Web Search Engines

- Applications

- Discovery and Usage (voiD)

- Equivalence Mining and Matching Frameworks

- Common Vocabularies / Ontologies / Micromodels

- Data Licensing

- Tools for annotating free-text with URIs

- THALIA testbed

- OLTP Benchmarks for Triplestores

- Broken Links in LOD

- Linking Open Drug Data

- [DataSets]

- [RDF Dumps]

- [SPARQL endpoints]

- [Link Statistics]

Meetings & Gatherings

LOD Community Gatherings

- Rio de Janeiro (WWW2013) Gathering (type: Face-Face Meeting)

- Hyderabad (WWW2011) Gathering (type: Face-Face Meeting)

- Semtech 2010 (San Francisco) (type: Face-Face Meeting)

- Heraklion (ESWC 2010) Gathering (type: Face-Face Meeting)

- Raleigh (WWW2010) Gathering (type: Face-Face Meeting)

- 2nd Linked Data Meetup London (type: Face-Face Meeting, Feb 2010)

- Virgina (ISWC09) Gathering (type: Face-Face Meeting)

- 1st Linked Data Meetup London (type: Face-Face Meeting, Sep 2009)

- Heraklion (ESWC09) Gathering (type: Face-Face Meeting)

- BOF on a Research Agenda For Linked Data at WWW2009 (type: Face-Face Meeting)

- Madrid (WWW2009) Gathering (type: Face-Face Meeting)

- Karlsruhe (ISWC2008) Gathering (type: Face-Face Meeting)

- Tenerife (ESWC08) Gathering (type: Face-Face Meeting)

- BOF on Semantic Web Search Engines at WWW2008 (type: Face-Face Meeting)

- Beijing (WWW2008) Gathering (type: Face-Face Meeting/Birthday Celebration)

- Busan (ISWC2007) Gathering (type: Face-Face Meeting)

- London (August 2007) Gathering (type: Face-Face Meeting)

- Innsbruck (ESWC2007) Gathering (type: Face-Face Meeting)

- Banff (WWW2007) Gathering (type: Face-Face Meeting)

Linked Data Conferences, Workshops and Tutorials

- 5rd International Workshop on Consuming Linked Data (COLD 2014) at ISWC 2014 (October 2014, Riva del Garda, Italy)

- Linked Data on the Web Workshop (LDOW2014) at WWW2014 (April 2014, Seoul, Korea)

- 4rd International Workshop on Consuming Linked Data (COLD 2013) at ISWC 2013 (October 2013, Sydney, Australia)

- Linked Data on the Web Workshop (LDOW2013) at WWW2013 (May 2013, Rio de Janeiro, Brazil)

- 3rd International Workshop on Consuming Linked Data (COLD 2012) at ISWC 2012 (November 2012, Boston, USA)

- Linked Data on the Web Workshop (LDOW2012) at WWW2012 (April 2012, Lyon, France)

- 2nd International Workshop on Consuming Linked Data (COLD 2011) at ISWC 2011 (October 2011, Bonn, Germany)

- Linked Data on the Web Workshop (LDOW2011) at WWW2011 (March 2011, Hyderabad, India)

- Linked Data in the Future Internet session at the Future Internet Assembly (Ghent 16/17 Dec 2010)

- 1st International Workshop on Consuming Linked Data (COLD 2010) at ISWC 2010 (November 2010, Shanghai, China)

- 3rd KRDB school on Trends in the Web of Data (KRDBs-2010) (September 2010, Bozen-Bolzano, Italy)

- Linked Data on the Web Workshop (LDOW2010) at WWW2010 (April 2010, Raleigh, North Carolina, USA)

- 13th International Workshop on the Web and Databases (WebDB2010), June 2010, Indianapolis, U.S.A.

- Linked AI: Linked Data Meets Artificial Intelligence at AAAI2009 (March 2010, Stanford, USA)

- Linked Data on the Web Workshop (LDOW2009) at WWW2009 (April 2009, Madrid, Spain)

- How to Publish Linked Data on the Web tutorial at ISWC2008 (October 2008, Karlsruhe, Germany)

- Data Integration through Semantic Technologies

- New York City (June 2008) Linked Data Planet (type: Conference & Expo) (Unofficial meetup coordination)

- Linked Data on the Web Workshop (LDOW2008) at WWW2008

See Also

Tutorials and Overview Articles

- Christian Bizer, Tom Heath, Tim Berners-Lee: Linked Data - The Story So Far. In: IJSWIS, Vol. 5, Issue 3, Pages 1-22, 2009.

- Tom Heath, Christian Bizer: Linked Data: Evolving the Web into a Global Data Space. Synthesis Lectures on the Semantic Web: Theory and Technology, Morgan & Claypool Publishers, ISBN 978160845431, 2011.

- Florian Bauer, Martin Kaltenböck: Linked Open Data: The Essentials - A Quick Start Guide for Decision Makers, edition mino, ISBN 9783902796059, 2012.

- Christian Bizer et al.: How to publish Linked Data on the Web, 2007.

- Jeni Tennison: Creating Linked Data - Part I-V, 2009.

- Tim Berners-Lee: Putting Government Data online, Web architecture design note, 2009.

- Max Schmachtenberg, Christian Bizer, Heiko Paulheim: State of the LOD Cloud 2014, 2014.

- Joab Jackson: The Web's next act: A worldwide database. Government Computer News article about Linked Data.

- Christian Bizer: The Emerging Web of Linked Data. In: IEEE Intelligent Systems, Vol. 24, No. 5. pp. 87-92, 2009.

- Richard Cyganiak: Debugging Semantic Web sites with cURL - one way to test Semantic Web sites

- Sauermann et al.: Cool URIs for the Semantic Web - URI dereferencing and content-negotiation

- OpenLink Software: Deploying Linked Data using the Virtuoso Universal Server

- OpenLink Software: Generating Linked Data from non RDF Data Sources via the Generating Linked Data from non RDF Data Sources via the Virtuoso Sponger

- Michael Hausenblas: Linked Data Tutorial via slideshare

- Tom Heath, Michael Hausenblas, Chris Bizer, Richard Cyganiak, Olaf Hartig: How to Publish Linked Data on the Web, ISWC08 video

- Sören Auer: Linked Data Tutorial: From the Document Web to a Web of Linked Data

- Knud Möller, Michael Hausenblas, Gunnar AAstrand Grimnes: Learning from Linked Open Data Usage: Patterns & Metrics. Paper analysing the traffic on several Web of Data sites.

- Christian Bizer: Web of Linked Data – A global public dataspace on the Web (Slides). Talk at the WebDB workshop at SIGMOD 2010.

- Michael J. Franklin, Alon Y. Halevy, David Maier: From databases to dataspaces: a new abstraction for information management. SIGMOD Record 34(4): 27-33 (2005). Article introducing dataspaces as a new agenda and traget architecture for the database community.

- Alon Y. Halevy, David Maier: Slides from the 1st Tutorial on Dataspaces (Part 1, Part 2) held at VLDB 2008.

Presentations

- Tim Berners-Lee: The next Web of open, linked data. Video of Tim's talk at TED 2009. Also available: Slides.

- Chris Bizer, Tom Heath, Tim Berners-Lee: Linked Data: Principles and State of the Art talk at the W3C Track at WWW2008.

- Tim Berners-Lee: Browsable Data

- Various presentations from Kingsley Idehen and OpenLink Software

Historical Perspective

- Tim Berners-Lee: Linked Data (architecture note outlining the basic ideas of Linked Data)

- Christian Bizer et al.: Interlinking Open Data on the Web (Two page document giving an overview about the Linking Open Data project)

- Alistair Miles et al.: Best Practice Recipes for Publishing RDF Vocabularies (W3C draft on serving RDF vocabularies according to the Linked Data principles)

- Ding, Finin: Characterizing the Semantic Web on the Web (kind of outdated but still interesting paper on RDF data on the Web)

- Michael K. Bergman: More Structure, More Terminology and (hopefully) More Clarity (contextualization of Linked Data in the general development of the Web)

- NetworkedData (old page on related topic)

- RdfLite ("no bnodes" etc)

- Following your Nose to the Web of Data Draft version of an Information Standards Quarterly article about Linked Data and the LOD project.

- Mike Bergman: Linked Data Comes of Age - LinkedData Planet and LDOW Set the Pace for 2008

- Uche Ogbuji: Real Web 2.0: Linking open data - Discover the community that sees Web 2.0 as a way to revolutionize information on the Web, IBM Developerworks, Feb. 2008.

- Tom Heath: 'No more toy examples' - the Linking Open Data project, one year on. Talis Platform News, Feb. 2008.

Research Projects focusing on Linked Data

- EnAKTing - Forging the Web of Linked Data EPSRC-funded project dedicated to solving fundamental problems in achieving an effective web of linked data.

- LOD2 – Creating Knowledge out of Interlinked Data EU-funded IP project which develops a Linked Data tool stack.

- LATC - Linked Open Data Around the Clock EU-funded SA project that supports the community to publish and consume Linked Data.

Demos

Mailing List

As there are lots of interesting mail conversations around the project, we decided that we need a mailing list.

The list is hosted by W3C and you can subscribe it at [1].

The project's mailing list was hosted by the MIT SIMILE project until Spring 2008; MIT also maintains an archive of the list until that point.

FAQ

1. Please provide a brief description of your proposed project.

The Open Data Movement aims at making data freely available to everyone. There are already various interesting open data sources available on the Web. Examples include Wikipedia, Wikibooks, Geonames, MusicBrainz, WordNet, the DBLP bibliography and many more which are published under Creative Commons or Talis licenses.

The goal of the Linking Open Data project is to build a data commons by making various open data sources available on the Web as RDF and by setting RDF links between data items from different data sources.

RDF links enable you to navigate from a data item within one data source to related data items within other sources using a Semantic Web browser. RDF links can also be followed by the crawlers of Semantic Web search engines, which may provide sophisticated search and query capabilities over crawled data. As query results are structured data and not just links to HTML pages, they can be used within other applications.

There are already some data publishing efforts. Examples include the DBpedia.org project, the Geonames Ontology, the D2R Server publishing the DBLP bibliography and the dbtune music server. There are also initial efforts to interlink these data sources. For instance, the DBpedia RDF descriptions of cities includes owl:sameAs links to the Geonames data about the city (1). Another example is the RDF Book Mashup which links book authors to paper authors within the DBLP bibliography (2).

2. Why did you select this particular project?

For demonstrating the value of the Semantic Web it is essential to have more real-world data online. RDF is also the obvious technology to inter-link data from various sources.

3. Why do you think this project will have a wide impact?

A huge inter-linked data set would be beneficial for various Semantic Web development areas, including Semantic Web browsers and other user interfaces, Semantic Web crawlers, RDF repositories and reasoning engines.

Having a variety of useful data online would encourage people to link to it and could help bootstrapping the Semantic Web as a whole.

4. Can your project be easily integrated with other wide-spread systems? If so, which and how?

The data can instantly be browsed with Semantic Web browsers like Tabulator or Disco or OpenLink RDF Browser or OpenLink Data Explorer. Existing Semantic Web crawlers like SWSE, Swoogle can provide an integrated view on the data and sophisticated search interfaces.

5. Why is it that this project should be done right now, i.e. why should people prioritize this ahead of other projects?

It is getting boring to play around with toy examples as most Semantic Web projects do.

6. What can you contribute to the project?

We will keep on working on DBpedia and start serving RDF for all 1.6 million concepts in Wikipedia in a couple of weeks. As Wikipedia contains information about various domains, we think DBpedia URIs could function as a valuable linking-hub for interconnecting various data sources. We could link to related data from DBpedia as we already did with the links to Geonames.

7. What contribution would you need from others?

- Propose additional open data sources that could be mapped to RDF.

- Convert a data source to RDF and serve it as linked data or SPARQL endpoint on the Web.

- Invent heuristics to auto-generate links between data items from different sources.

8. What standardization should the Semantic Web community at large undertake to support the project?

There is already standardization going on within the SWBP working group: Best Practice Recipes for Publishing RDF Vocabularies. It would also be useful to propagate Tim's linked data ideas.

9. How does your project encourage others not currently involved with Semantic Web technologies to get involved (by providing data or make a coding commitment)?

Having useful data online might initialize network effects. The project could raise awareness within the Open Data community about the benefits that RDF as a shared data model offers them. Having richly inter-linked data online might inspire people to create interesting mashups and other RDF-aware applications.

10. What would be the main benefit of using Semantic Web technologies to achieve the goals of the project, compared to other technologies?

RDF provides a flexible data model for integrating information from different sources. Especially its linking capabilities are not provided by any other data model.

Commitments

If you like this project, please write your name below and indicate what contribution you can make to the project. Possible forms of commitment are:

- I think this project is a good idea and it's realization would be useful.

- I would like to propose further data sources for being published as RDF

- I would like to convert a data source to RDF

- I could serve some data from my server (if somebody would give it to me)

- I would like to work on heuristics to auto-generate links between data items from different sources

- I could talk with other people that might want to contribute to the project

Chris Bizer and Richard Cyganiak proposed the project to the W3C SWEO. We maintain several Linked Data sources, including DBpedia, DBLP Berlin, CIA Factbook, Book Mashup and Eurostat, and do outreach and coordination work for the project.

Sören Auer - I try to contribute with regard to converting, serving data-sources and talking to people ;-)

Bernard Vatant - Already involved in Geonames ontology, and linking Geonames data and concepts to other sources such as INSEE data. Projects to do more, linking to GEMET concepts, Wikipedia categories etc.

Josh Tauberer - 700 million triples of U.S. Census data coming very soon now.... (Just having some free disk space issues loading it into MySQL.) Tying this to GeoNames will be an interesting/useful project for someone looking for a project.

Tom Heath - Great idea. I can contribute the involvement of Revyu.com, (AFAIK) the only RDF-based reviewing and rating site in the wild. The sites exposes data using FOAF, the Review Vocab and Richard Newman's Tag Ontology, and everything gets dereferenceable URIs. The data set is modest, but growing. I'm really interested in developing heuristics to auto-generate sameAs links between URIs from Revyu and elsewhere, and ways to infer locations of things from reviews and tags and hook this into Geonames.

Kingsley Idehen, Orri Erling, and Frederick Giasson - Additional and complementary RDF Data Sources in line with Linked Data principles via projects such as PingTheSemanticWeb, SearchTheSemanticWeb, and OpenLink Data Spaces. In addition we will be making Virtuoso available as an RDF Data Store for scalability experimentation, exploration activities, etc.

Marc Wick - Implementation of Geonames RDF web services

Felix Van de Maele - A very interesting project. I developed an ontology-focused crawler and am currently working on the community-driven ontology matcher and mediator which might be handy to interlink RDF data sources.

Stefano Mazzocchi - As part of the MIT Simile Project, I've been RDFizing large data sets for years (unfortunately, most of these are not data I can make publicly available). We provide a way to export RDF data from all of our RDF browsing tools, but we haven't focused on providing URI dereferencing for such data and I agree that it might be important to start doing so. The juiciest dataset we have to offer is a 50Mt dump of the MIT Libraries catalog covering about a million books. I'm also currently working on an owl:sameAs-based RDF smoosher (which is already functional from the command line) and I'm planning on working on equivalence mining next. Also worth noting how the SIMILE Project has a large collection of RDFizing programs that can be used to generate large quantities of RDF from existing data.

DannyAyers - intend to look at ConverterFromRdf possibilities, linking the output semweb systems to "legacy" data consumers, also wondering about low-cost linkage/heuristics for describing data sets

Ed Summers - I'm a software developer at the Library of Congress interested in making bibliographic and authority data sets available to the semantic web.

Yves Raimond - I am a PhD student in the Centre for Digital Music, Queen Mary, University of London, and I am interested in linking music-related open data (Musicbrainz, Magnatune, Jamendo, Dogmazic, Mutopia, among others...). I am also part of two projects, in which I am trying to promote such an approach: EASAIER (Enabling Access to Sound Archives through Enrichment and Retrieval) and OMRAS2 (Online Music Recognition and Searching).

Vangelis Vassiliadis - I could work on heuristics to auto-generate links between data items from different sources and on adding domain knowledge to different data sets by means of OWL.

Dmitry Ulanov - I'm a developer of THALIA testbed. It can be used for benchmarking relational database to RDF mapping tools.

Georgi Kobilarov - I maintain the DBpedia extraction framework. I'm interested in developing tools to help data publishers interlink their databases and in building UIs for end-users .

Huajun Chen - developer of DartGrid which is a relational data integration toolkit using semantic web technologies. Two major components of DartGrid are a visulized semantic mapping tool and a view-based(or more generally rule-based) SPARQL-SQL query rewriting component. ISWC2006 Paper introduces the details.

Chris Wilper - I'm a developer of Fedora and also work with the National Science Digital Library group at Cornell. I'm interested in the collaborative development of OLTP benchmarks for triplestores.

Giovanni Tummarello - I created Sindice, together with Eyal Oren. Sindice is a linked data search engine which returns ranked lists of "SeeAlso" URLs which contain information about a given URI. In a sense it overcomes the problem of the need of the mandatory "SeeAlso" statements by looking anywhere on the web (via people providing direct Ping either to ourself or to PTSW and via our array of swse bots). "SeeAlso" statemens remain useful however for ranking purposes. Service has a simple http API, see for example all the links Sindice knows which talk about Tim Berners-Lee here.

Sherman D. Monroe - I'm the author of Cypher, which is a transcoder with generates the RDF and SeRQL (working on SPARQL port) representation of natural language phrases and sentences. The project page can be found here. The Cypher project aims to collect and unify the various sources of data used for NLP tasks, such as WordNet, FrameNet, PropBank, as well as annotated corpora, and also to provide standard ontologies for things like part-of-speech tagging. We wish to provide a single resource which NLP applications can use to leverage this data. I'm also working on a Semantic Web web service called overdogg (currently in alpha) which is a new type of marketplace based on reverse auction for services, and another soon to be announced service which builds FOAF databases of users.

Adam Sobieski - Interested in event ontology and also wikitology. I'm making a website that allows users to select or create predicates and drag and drop nouns (noun phrases) from sentences into predicate slots. The interface will capture pronoun resolution and semantics from visitors reading. The sentences will be viewed in order from articles to obtain context information. The downloadable resource will be both a corpus (hopefully as useful as Penn treebank and Redwoods) and a collaborative ontology relating nouns from real-world encyclopedic articles.

Fabian M. Suchanek and Gjergji Kasneci - We provide YAGO, a large ontology. YAGO is available for querying and for download in different formats (RDFS, XML, database, text).

Troy Self - I maintain SemWebCentral, which is a development site for Open Source Semantic Web tools. I also maintain the RDF browser, ObjectViewer, the ontology summarizer, Ocelot, and was one of the primary developers of the Semantic Web Development Environment, SWeDE.

Bernhard Haslhofer - I am working on Semantic Web topics in the Digital Library and Archives domain. An easy way to link hundreds of data sources would be to build a wrapper for the Open Archives Protocol for Metadata Harvesting (OAI-PMH). I intend to get some work done in this direction.

David Peterson - I am working on getting large Australian science data sets converted to RDF and accessible via SPARQL. We work with over 13 large and diverse science organisations so I believe this will be a valuable contribution.

Joerg Diederich - I am working on Semantic Web topics and Digital Libraries and I am the maintainer of FacetedDBLP. I am planning to contribute my local DBLP data (updated weekly) by means of the D2R technology from FUBerlin very soon.

Danny Gagne -- I think this is a great idea. I'm going to work on building some tools, trying to create a small dataset, who knows what else :)

MichaelHausenblas -- I joined the LOD community project in June 2007 after Chris Bizer has told me about this great idea. In the meantime quite some things emerged and I think I have left some traces ;) In the beginning I was interested in building a linked dataset, which actually yielded riese, the RDFised and interlinked version of the Eurostat's statistical data. As a by-product we developed a pradigm allowing for manual interlinking, called UCI (User-Contributed Interlinking). Then my focus shifted and I wanted to build applications on top of linked data which triggered the creation of voiD, the 'Vocabulary of Interlinked Datasets'. Now, as I'm a multimedia guy at heart, I also started to apply linked data principles to multimedia content. Finally, I gave a LOD tutorial at ISWC08 with Tom, Chris, Richard and Olaf (see also my quick intro into linked data). I've participated in (and co-organised) several LOD Gatherings so far and plan to do so in the future.

Jonathan Gray -- I'm from the Open Knowledge Foundation and I'm interested in open data licensing and locating and listing open data sets.

Bernhard Schandl -- I am working on integration of semantic data into user desktops and file systems and am interested in the possibilities of publishing such data on the web.

David Huynh -- I'm interested in building UIs for browsing and viewing the collected data. I don't think that a SPARQL interface appeals to the general public, and a pure search text box a la Google takes sufficient advantage of the graph nature of the data.

Daniel Lewis -- I am a Technology Evangelist for OpenLink Software, my interests are in making the Social Web more Semantic, making the Semantic Web more User Friendly and making the web more intelligent. The only way to advance web applications is to expose and link data between domains - Semantic Web technology can do that. My blog is available here and I tend to talk about various subjects (not just about the Semantic Web).

Andreas Langegger -- I'm currently developing a middleware for virtual data integration based on SW technologies. It will be used inside the Austrian Grid for sharing structured scientific data but because of the relevance for the SW community, it will be released as SemWIQ (Semantic Web Integrator and Query Engine) in mid-2008. Among other goals, I try to keep setup / configuration as simple as possible. Stay tuned (currently optimization is on the top of the agenda)...

Sergey Chernyshev -- I'm running TechPresentations.org project based on Semantic MediaWiki (SMW) technologies and participating in a development community around SMW. Among my goals is to connect TechPresentations data to LOD dataset and to make use of SMW-related technologies to help crowd-sourced data get interconnected with other LOD data sets.

Rob Cakebread -- I run Doapspace.org and am mainly interested in DOAP and linking it with FOAF, BEATLe, SIOC. I'm a Gentoo Linux developer and I'm working on tools for users and package maintainers to benefit from the metadata provided by DOAP and related ontologies.

Francois Scharffe -- I'm a researcher at STI Innsbruck in the area ontology alignment. I'm involved in the EASAIER project where we publish music related data sets using the music ontology. I've worked on SPARQL++, a SPARQL extension allowing to transfer RDF data from one ontology to another. I'm interested in data fusion techniques. I'm also interested creating a classical music reference knowledge base that could be used as an anchor to publish classical music data sets.

Oktie Hassanzadeh -- I'm a graduate research assistant at the Department of Computer Science, University of Toronto and a member of the Database Group. Together with Mariano Consens, we developed the Linked Movie DataBase (LinkedMDB), that aims at publishing the first open linked data dedicated to movies. LinkedMDB showcases the capabilities of "ODDLinker", a tool under development, that employs state-of-the-art similarity join techniques for finding links between different data sources.

Daniel Schwabe -- I'm a professor at Department of Informatics, Catholic University of Rio de Janeiro, where I head the TecWeb Laboratory. I work on design methods for (semantic) web applications seen as part of men-machine teams. We have developed Semantic Wiki authoring enviroments, and I am also trying to link the Portinari Project data into the LoD cloud. We are also working on direct manipulation interfaces that allow users to explore RDF data sets, including querying, without requiring any knowledge of query languages.

Andraz Tori -- I'm CTO at Zemanta. I work on architecting Zemanta's engine to disambiguate to Linking Open Data entities. Would like to see which parts of LOD are candidates for inclusion into Zemanta and get the feedback of what the API needs to return to be most useful for enabling LOD mashups.

Ted Thibodeau Jr - I've been with OpenLink Software since December 2000, working with Data Access and all that entails, including the Linking Open Data project, many aspects of the Virtuoso Universal Server, exposing more-and-less structured data (from RDBMS content to plaintext) as RDF, dreaming up new wish lists for Linked Data features and applications, connecting people and projects, and more. My dream includes realization of the Knowledge Navigator concept, complete with unrolled and/or unfolded screen, voice commands, intelligent agents, etc. Before joining OpenLink, I spent time serving various roles in various industries, all of which would have benefited from the LOD project, and those experiences guide my efforts today.

Andreas Harth -- I'm a researcher at DERI Galway, working on integrating web data without making assumptions on the schema used. I currently crawl parts of the LOD (at least weekly) and provide search, browsing and navigation functionality over that LOD data in VisiNav. The site is useful for the LOD crowd as it allows people to inspect LOD data (including provenance tracking) in case there are hickups in the data published in the LOD cloud.

Hydrasi -- I'm JohnMetta, a scientist, 20+ year Open Source Software developer, and founder at Hydrasi. Hydrasi is a company focused on the water and climate sciences and we are planning on developing a non-profit foundation, the Climate Cloud Initiative, as an open data network of water and climate data. I'm happy to see such a diverse and active crowd in open data-- from so many fields.

TobyInkster - I'm Toby Inkster. Not sure why I've added myself to this list so late. Without exaggeration, I think Linked Data could play a part in solving some of the biggest problems of the 21st century. We can't really in advance which information will be turn out to be needed to save the world - the early scientists who caught lightning with kites could never have known that electricity and the things electronics have made possible would be so important in the modern age - so it's key to link together as much data as possible.

Mariano Rico -- I developed VPOET, a web application oriented to create presentation templates for handling semantic data easily. As an example, click here to browse TBL's FOAF profile under a given template. These templates can be used also by web developers through HTTP messages, and end-users can use these templates by using the Google Gadget GG-VPOET. You are welcome to create your own templates, reuse other's templates, or create your own templates repository (code available). I am enthusiastic with LOD and I think that semantic templates can contribute to reduce the adoption barrier of semantic data for common web developers and end-users.

How To Join the Project

To join the project, please do the following:

- Sign up to the mailing list.

- Send a little self introduction to the mailing list (include an intro to your project and associated RDF Data Sets where such exist or are planned).

- Register at [2], which automatically gives you a WebID (an ID for you, the Person Entity, e.g., WebID for Kingsley Idehen, an Entity of Type: Person) and an OpenID URL. Use the Profile page to Link to your other URIs (if such exist) via the "Synonyms" input field.

- Create a Wikiword (Topic) for yourself (e.g., Kingsley Idehen) and expose one of your Person Entity URIs there.

- Add your Wikiword to this page.

- Add Project References to section above.

- Add Data Set References to the RDF Data Sets page. Please also add associated RDF Dumps, SPARQL Endpoints, and other relevant pointers to the assorted sub-pages.