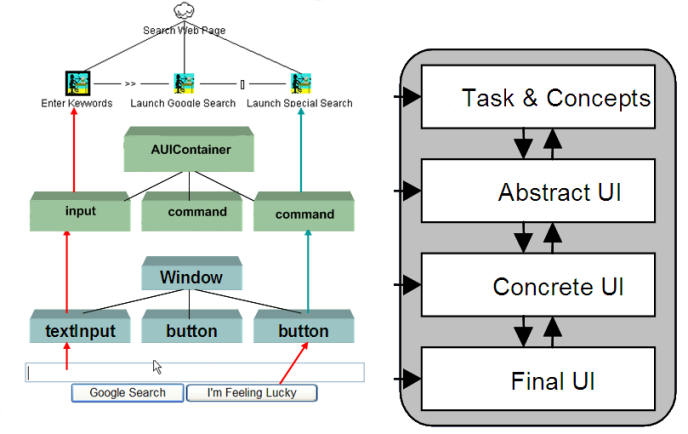

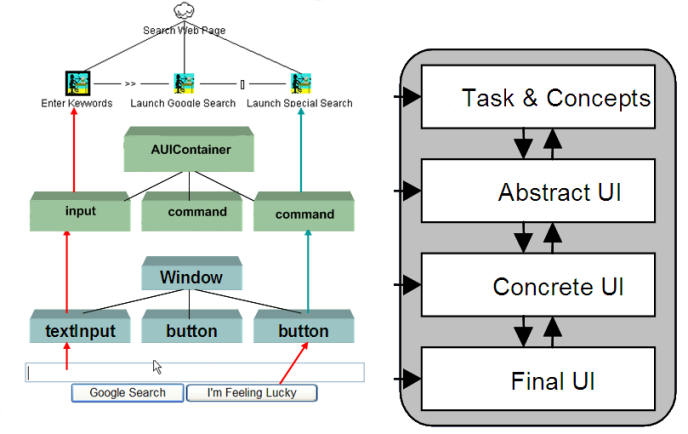

This document describes work on developing a practical understanding of how to create automated design assistants for model-based user interface design. The ideas will be prototyped as part of the "Quill" browser-based editor for the EU FP7 Serenoa Project.

When a project manager asks for a user interface to be designed for some purpose, the business modeller identifies the requirements the user interface needs to fulfil to satisfy the business goals. The data modeller takes these and constructs a detailed data model. The user interface expert is then able to sketch a user interface, and to work with an artwork designer to mock up the proposed design. In a small business, a single person may play several of these roles. A concrete user interface is generally easier for the manager to understand than more abstract representations, but when it comes to deploying a user interface across different target platforms, having an explicit shared abstract user interface can help to ensure that the concrete user interfaces for each target platform are kept in synchronization. The idea of abstract and concrete user interfaces is explained in the following figure:

Illustration of the CAMELEON Reference Framework

Further information can be found in the W3C Model-Based UI Incubator Group Report.

An automated design assistant can remove the effort needed for creating the abstract UI and keeping it synchronized with the concrete UIs for each target platform, and with the evolving domain model. If the UI designer changes the abstract UI, the design assistant can propose changes to the concrete UIs. Likewise, if the data modeller updates the domain model, the design assistant can in turn update the abstract and concrete UIs to match, and invite the UI designer to review the changes. If the UI designer updates one of the concrete UIs, the design assistant can work out what changes are needed to the abstract UI, and then work out what changes are needed to the other concrete UIs.

How can a design assistant apply design preferences whilst remaining consistent with the choices already made by the human designer? The assistant needs to be able to propagate implications from the domain model to the AUI, from the AUI to the CUI, and the CUI to the AUI and domain model. If the assistant can't find a solution, the human designer needs to be pointed to the problem and asked to resolve it. Most likely that will involve relaxing the constraints.

The existing models and the relationships that are known to hold between them can together be used to infer updates to the models. For example, if the concrete UI model includes a button, then this implies an action in the abstract UI, since each button must be associated with an AUI action. This kind of reasoning is generally referred to as abduction. As a starting point for understanding how this works, a testbed has been developed for conjunctive relationships between relational data:

This testbed infers the implied data records in two passes, allowing information to propagate from left to right and right to left over the conjunction of terms appearing in the relationship. This is an oversimplified approach, but was a valuable step towards a more general account.
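The button example above can be sketched in a few lines of Python. This is not the testbed itself, only a minimal illustration of inferring implied records from a known relationship. The flat record shapes (`id`, `action`, `name`) and the function name are assumptions made for the sketch.

```python
def infer_implied_actions(cui_buttons, aui_actions):
    """Return AUI action records implied by CUI buttons but not yet present.

    cui_buttons: list of dicts such as {"id": "b1", "action": "print"}
    aui_actions: list of dicts such as {"name": "print"}
    Both record shapes are hypothetical, chosen only for this sketch.
    """
    known = {action["name"] for action in aui_actions}
    implied = []
    for button in cui_buttons:
        name = button["action"]
        if name not in known:
            # Each button must be associated with an AUI action,
            # so a missing action is inferred and flagged as such.
            implied.append({"class": "action", "name": name, "inferred": True})
            known.add(name)
    return implied


buttons = [{"id": "b1", "action": "print"}, {"id": "b2", "action": "cancel"}]
actions = [{"name": "print"}]
new_actions = infer_implied_actions(buttons, actions)
```

Flagging inferred records makes it possible to tell them apart later from decisions made by the human designer.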

The potential relationships can be one to many, for example, an AUI selection could map to a drop-down list or a set of radio buttons. However, only one solution is needed in a particular realization of the AUI. The preferred solution may depend on the context and this can be dealt with by associating relationships with preconditions that determine when the relationship is applicable. If the human designer disagrees with the choice made by the design assistant, the authoring tool should make it easy to switch to another choice, and for the design assistant to work through the implications.
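One way to picture relationships with preconditions is as a list of rules that are filtered by context. The sketch below is an assumption-laden illustration: the `Rule` class, the `max_radio_options` context key and the ordering of rules by preference are all hypothetical, not part of any existing tool.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    aui_class: str
    cui_control: str
    precondition: Callable[[dict, dict], bool]  # (aui_node, context) -> bool


# Rules are listed in order of preference (an assumption of this sketch).
RULES = [
    Rule("selection", "radio buttons",
         lambda node, ctx: len(node["options"]) <= ctx["max_radio_options"]),
    Rule("selection", "drop-down list", lambda node, ctx: True),
]


def candidates(node, context):
    """All CUI controls whose precondition holds for this AUI node."""
    return [rule.cui_control for rule in RULES
            if rule.aui_class == node["class"] and rule.precondition(node, context)]


def choose(node, context, override=None):
    """Pick the preferred candidate, unless the designer overrides it."""
    options = candidates(node, context)
    if override is not None:
        if override not in options:
            raise ValueError(f"{override!r} is not applicable here")
        return override
    return options[0] if options else None


small = {"class": "selection", "options": ["a", "b", "c"]}
large = {"class": "selection", "options": [str(i) for i in range(10)]}
context = {"max_radio_options": 4}
```

Here `choose(small, context)` prefers radio buttons, while passing `override="drop-down list"` models the designer switching to another applicable choice.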

The inference process needs to take into account the hierarchical structure of user interface models. Roughly speaking, the inference process needs to support top down and bottom up propagation of implications, using unification over hierarchical data structures. The details of this remain unclear, and it is hoped that by working through some examples, it will become clearer, to the point that an experimental implementation is possible as a basis for testing the ideas.

This is an exploration of UI examples found on the Web. A good source is the compendium of UI design tools, e.g. ForeUI and the mockflow wireframe examples. The aim here is to represent the essence of the examples in terms of the Cameleon reference framework, and then to look at how the CUI could be derived from the AUI or vice versa. Finally, there are mixed scenarios where users have defined partial models at both the AUI and CUI levels. Here we want to see what kind of reasoning can be applied to infer the parts of the AUI and CUI models that are implied by what the user has already defined, and by the relationship rules that apply between the AUI and CUI. Once that is understood, it should be possible to extend the reasoner to include the domain and task models at the top level of the Cameleon reference framework.

Note that I am not adhering to the Serenoa ASFE-DL meta model as the aim here is to explore the kinds of reasoning needed for synchronizing AUI and CUI models, and the precise meta-model isn't critical to that aim. Too much detail will just get in the way of gaining insights into the inference mechanisms needed to provide an automated design assistant. These details can be added back via successive evolutionary steps, starting from a basic solution.

■ Group with title (window)

* Group with title (tab)

- input (text)

- numeric range

- selection

- action

- selection

- input (boolean)

- discrete range

* Group with title (tab)

...

A range is ordered, but a selection doesn't need to be. A discrete range is a sequence of names, and can be contrasted with a numeric range where the sequence is a bounded set of real or integer values.

■ Window with title

contains

■ Tab collection

contains

■ Tab with title

contains

■ vertical group

* text input

* horizontal group

- numeric input with spin control

- combo-box list selection

- button

* horizontal group

- radio button 1

- radio button 2

* horizontal group

- check box

- slider

■ group with title

* account info (a data model with named fields and data types)

* agreement to terms of service (with reference to description)

* proof that user is a human being (with challenge)

* action (to register)

The inputs are passed to a register method in the domain model. The captcha control has a visual representation as an image, an aural representation as a sound clip, and a hidden challenge that will be used to verify the value provided by the user as text or as a sound clip. It should be possible to import pre-built components at the AUI level. In this case, there is a one-to-one relationship with the corresponding CUI component (for the given target device).

■ Dialog with title

contains

■ vertical group

* grid group

- row set bound to list

- label

- text input

- row

- empty

- checkbox with rich text label

* captcha component (imported pre-built component)

* button bound to a method in the domain model

A grid is a group of rows of cells. The cells can span one or more rows and columns as needed. The row set saves effort by binding to a set of properties in a record type in the domain model. You need to define a sequence of controls and bind this to the data model. The instances of the data records then correspond to the rows in the grid.
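As a rough sketch of the row set idea, the following Python expands a record type into grid rows. It assumes, as in the registration example, that each property yields one row consisting of a label and a text input. The record type shape (`name`, `properties`, `label`) and the dotted binding path are hypothetical.

```python
def expand_row_set(record_type):
    """Produce one (label, text input) grid row per property in record_type."""
    rows = []
    for prop in record_type["properties"]:
        rows.append([
            {"control": "label", "text": prop["label"]},
            # Hypothetical dotted path naming the bound domain model property.
            {"control": "text input",
             "bind": f'{record_type["name"]}.{prop["name"]}'},
        ])
    return rows


account = {
    "name": "account",
    "properties": [
        {"name": "email", "label": "Email"},
        {"name": "password", "label": "Password"},
    ],
}
rows = expand_row_set(account)
```

The designer defines the sequence of controls once, and the grid rows follow from the data model.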

■ group with title (print)

contains

■ group with title (general)

...

■ group with title (options)

■ group with title (select printer)

* action (add printer)

* action set (bound to set of printers in domain model)

* input (boolean, "print to file")

* action preferences

* action find printer

■ group with title (print range)

* selection (all, current page, pages)

* number (number of pages)

■ group without title

* numeric range (number of copies)

* boolean (collate)

■ group

* action (print)

* action (cancel)

* action (apply)

Note that the numeric field number of pages is disabled unless the user selects "pages". This can be modeled by setting the enabled property to a boolean expression, or by binding it directly to a particular choice.
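Both options for the enabled property can be illustrated with a small Python sketch. The `enabled` key, the shape of the binding and the `state` dictionary holding current selection values are assumptions made for illustration only.

```python
def is_enabled(control, state):
    """Evaluate a control's hypothetical `enabled` property against UI state."""
    rule = control.get("enabled", True)
    if callable(rule):                      # a boolean expression
        return bool(rule(state))
    if isinstance(rule, dict):              # direct binding to one choice
        return state.get(rule["selection"]) == rule["choice"]
    return bool(rule)                       # a constant


by_expression = {
    "name": "number of pages",
    "enabled": lambda state: state["print range"] == "pages",
}
by_binding = {
    "name": "number of pages",
    "enabled": {"selection": "print range", "choice": "pages"},
}
```

The direct binding is easier for a reasoner to inspect, whereas the expression form is more general.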

■ Dialog with title

contains

■ vertical group

■ Tab collection

contains

■ Tab with title ("Options")

contains

■ vertical group

* captioned group ("select printer")

- vertical group

- sequence (icon, printer)

- horizontal group (right aligned)

- check box ("print to file")

- button

- button

* horizontal group

- captioned group ("print range")

- vertical group

- radio button (all)

- radio button (current page)

- horizontal group

- radio button (pages)

- numeric input

* horizontal group (right aligned)

- button ("Print")

- button ("Cancel")

- button ("Apply", disabled)

The last group is part of a vertical group which starts with the tab collection and finishes with the buttons common to all tabs.

The abstract UI addresses three aims:

■ group with title (facebook)

contains

* output (rich text -- "Facebook helps ...")

* output (image -- facebook branding)

■ group with title (login)

* input (text -- email address or phone)

* input (text -- password)

* input (boolean)

* action (forgotten password)

* action login

■ group with title (signup)

* input (text -- first name)

* input (text -- surname1)

* input (text -- surname2)

* input (text -- email1)

* input (text -- email2)

* input (text -- password)

* input (date -- birthdate)

* input (enumeration [female, male])

* output (rich text -- links to why we need birthdate)

* output (rich text -- links to policies)

* action (sign up)

* output (rich text -- link to create page for celebrity, band or business)

■ Page with title

contains

■ vertical group

contains

■ horizontal group

* heading

■ grid group

* row

- text (email or phone)

- text (password)

- blank

* row

- text input (email or phone)

- text input (password)

- button (log in)

* row

- checkbox (keep me logged in)

- link (forgotten your password)

- blank

■ horizontal group

■ vertical group

* text "facebook helps ..."

* image

■ vertical group

* heading

* subheading

* text input (first name)

* text input (surname)

* text input (email1)

* text input (email2)

* text input (password)

* date (birthdate)

* rich text - link for why birthdate is needed

* radio button (female)

* radio button (male)

* rich text - links to policy

* button (sign up)

* rich text - link to create special page

When generating a concrete user interface design from an abstract user interface, the AUI groups have to be mapped to CUI containers, and a choice made for the layout. ASFE-DL uses CSS for layout, and as such is very flexible. However, too much flexibility will make it hard to create automated design assistants.

A grid is used for alignment of content for aesthetically pleasing layouts. Grids can be used for conventional tables. The following figure illustrates the idea and is taken from the CSS grid positioning module:

I don't yet adequately understand how grid layout works in practice, but note that there needs to be a simple way to bind data tables to grids. Automated design assistants need to be able to lay out a concrete user interface according to aesthetic design preferences, taking into account the relative importance of different content, and their interrelationships. This won't be easy for grids, and hence it is best deferred until simpler problems have been addressed.

A design assistant will need to evolve from a very simple starting point. The simplest approach is to ignore layout altogether and just position each container and each control sequentially (e.g. vertically). The next step would be to associate containers with horizontal or vertical layout policies.
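The two starting points described above can be sketched together: containers default to sequential vertical placement, and may optionally carry a horizontal policy. The node shape (`children`, `policy`, `width`, `height`) is an assumption for this sketch, and spacing, margins and alignment are ignored.

```python
def layout(node, x, y, positions):
    """Record the top-left corner of every control and return (width, height)."""
    if "children" not in node:            # a control with an innate size
        positions[node["name"]] = (x, y)
        return node["width"], node["height"]
    horizontal = node.get("policy", "vertical") == "horizontal"
    width = height = 0
    for child in node["children"]:
        if horizontal:
            # Place children side by side; the row is as tall as its tallest child.
            w, h = layout(child, x + width, y, positions)
            width += w
            height = max(height, h)
        else:
            # Stack children; the column is as wide as its widest child.
            w, h = layout(child, x, y + height, positions)
            width = max(width, w)
            height += h
    return width, height


ui = {"children": [
    {"name": "text1", "width": 200, "height": 20},
    {"policy": "horizontal", "children": [
        {"name": "spin1", "width": 60, "height": 20},
        {"name": "button1", "width": 80, "height": 24},
    ]},
]}
positions = {}
size = layout(ui, 0, 0, positions)
```

With no `policy` given anywhere, this reduces to the simplest approach of placing everything in one vertical sequence.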

UI controls have an innate size that is related to the default font size, and to the length of the caption. A simplification is to assume that the caption is a single line. Text input and text area controls will have some indication of the size they need to be. Larger controls will help to determine the layout: do they need to extend all the way across the window, or does it make sense to split into columns? Can the smaller controls be fitted horizontally in ways that make sense? The relative importance and the relationships between groups can also be used as a guide, e.g. controls for related tasks should be placed near to each other. One question is how to decide between alternative CUI solutions for a given AUI control. A drop down list is good for a child menu, and for selections when space is short. If there are only a few choices or space is plentiful, then radio buttons make sense.

User interfaces need to be laid out in time as well as space. What things make sense to show together and what should be shown later? This can be influenced by a distinction between everyday and rare tasks, or tasks only for advanced users. There are lots of possibilities: expanding/collapsing controls, tabbed panes, one pane replacing another, animated transitions, specialized controls, e.g. for galleries or file selection.

When engineering problems are found to be hard, the general approach is to split the problems into a number of smaller ones. In this case we need a way to delegate layout decisions to a layout module that acts as a black box with a clean interface, so that we can work on addressing the logical relationships without having to address layout at the same time. The choice of which kind of CUI control to use may need to be delegated to the layout module. One possibility is to first choose the controls based only upon generic considerations, e.g. the number of choices for a selection, and to then try to find a reasonable layout, and in the process to revisit earlier choices as appropriate. This raises the idea of support for backtracking where earlier decisions can be undone and remade differently. Alternatively, there could be a trial phase where different choices are evaluated, and a commit phase based upon the assessments made in the earlier phase.
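The backtracking idea can be illustrated with a deliberately small sketch, in which the only layout constraint is the available height. The fixed `ROW_HEIGHT`, the height estimates and the preference for radio buttons over a drop-down list are hypothetical simplifications, not a proposal for the real layout module.

```python
ROW_HEIGHT = 20  # hypothetical height of one row of controls


def alternatives(selection):
    """CUI alternatives for a selection, most preferred first, with heights."""
    count = len(selection["options"])
    return [("radio buttons", count * ROW_HEIGHT), ("drop-down list", ROW_HEIGHT)]


def assign_controls(selections, available_height, chosen=()):
    """Depth-first search that undoes earlier choices when later ones fail."""
    if not selections:
        return list(chosen)
    first, rest = selections[0], selections[1:]
    for control, height in alternatives(first):
        if height <= available_height:
            result = assign_controls(rest, available_height - height,
                                     chosen + ((first["name"], control),))
            if result is not None:
                return result
    return None  # nothing fits here, so the caller must try another choice


selections = [
    {"name": "s1", "options": ["a", "b", "c"]},
    {"name": "s2", "options": ["x", "y"]},
    {"name": "s3", "options": ["p", "q"]},
]
plan = assign_controls(selections, 80)
```

With 80 units of height, the initial choice of radio buttons for `s1` leaves too little room for `s3`, so the search returns to `s1` and remakes that decision.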

The domain and task models are the top layer in the Cameleon reference framework. In principle, the business modeller will identify the requirements for the data and tasks. The data modeller will then come up with a detailed domain model for review. The domain model consists of properties, methods and events. The domain and task models can be associated with annotations.

The domain and task models provide information that can be reused by the AUI and CUI, and taken into account when laying out the CUI.

Deriving the AUI from the CUI is, in principle, easier than the other way around, as we don't need to make complex choices over such things as layout. CUI controls map unambiguously to AUI controls. CUI containers that have a functional purpose map to AUI groups, but CUI containers that are only there for layout purposes can be ignored. A functional CUI control needs to be bound to properties or methods in the domain model, or to scripts used for event handlers. Each group or control may have short and long text descriptions where the associated information in the domain/task model is inadequate.
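A minimal sketch of this mapping follows. The mapping table, the set of layout-only container kinds and the node shape (`kind`, `name`, `children`) are assumptions loosely based on the examples earlier in this document. Bindings to the domain model and text descriptions are left out.

```python
# Hypothetical mapping from CUI control kinds to AUI classes.
CONTROL_TO_AUI = {
    "text input": "input",
    "check box": "input",
    "button": "action",
    "radio buttons": "selection",
    "drop-down list": "selection",
    "slider": "range",
}
# Containers that exist only for layout and carry no functional meaning.
LAYOUT_ONLY = {"vertical group", "horizontal group"}


def to_aui(node):
    """Return the list of AUI nodes that correspond to one CUI node."""
    kind = node["kind"]
    if "children" in node:
        children = [aui for child in node["children"] for aui in to_aui(child)]
        if kind in LAYOUT_ONLY:
            return children          # splice the children into the parent
        return [{"class": "group", "name": node["name"], "content": children}]
    return [{"class": CONTROL_TO_AUI[kind], "name": node["name"]}]


cui = {"kind": "window", "name": "demo", "children": [
    {"kind": "vertical group", "children": [
        {"kind": "text input", "name": "text1"},
        {"kind": "horizontal group", "children": [
            {"kind": "button", "name": "action1"},
        ]},
    ]},
]}
aui = to_aui(cui)
```

The function returns a list so that a layout-only container can contribute zero, one or many nodes to its parent group.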

Deriving the CUI from the AUI is essentially a matter of mapping the AUI groups and interactors to CUI containers and controls according to the context, and making use of the layout module for layout decisions. This may involve revising earlier tentative decisions when the layout module needs to change the choice of CUI controls to provide a better layout.

When the human designer updates the user interface, the design assistant is expected to propagate the implications of the update throughout all of the models at all levels in the Cameleon reference framework. Some changes might be trivial such as changing one of the options in a selection. Other changes might have knock on effects causing multiple changes. Adding further options to a selection may result in a switch from radio buttons to a drop down list and adjustments to the layout.

Early on, I expected this could be handled in terms of the event-condition-action rule pattern. However, further study suggested that the number of such rules would rapidly get out of hand, and that a more principled approach is needed. One approach under consideration is to identify which decisions were made by the human designer, discard all the others, and to resynchronize the models using abduction. If that proves too costly, then a more incremental approach will be needed that uses a record of dependencies to only revisit the minimum of previous decisions by the design assistant.

The abduction approach would work top down from the AUI to the CUI to deal with implications due to user updates to the AUI, and then from the CUI to the AUI to deal with implications due to user updates to the CUI. This would employ unification with matching of hierarchical structures, binding of variables and inferring missing models based upon known relationships. This involves searching through cross products of terms (analogous to joins in relational databases), and could scale poorly with the number of model instances. I suspect that practical examples of UI designs won't suffer from the combinatorial explosion, but this needs to be tested.
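To make the unification step more concrete, here is a small sketch of unification over nested dictionaries and lists. It assumes variables are written as strings starting with `?`, requires dictionaries to have identical keys, and omits the occurs check. These are simplifications for illustration and not a description of the eventual reasoner.

```python
def is_var(term):
    return isinstance(term, str) and term.startswith("?")


def resolve(term, bindings):
    """Follow variable bindings until reaching a value or an unbound variable."""
    while is_var(term) and term in bindings:
        term = bindings[term]
    return term


def unify(a, b, bindings):
    """Return the extended bindings if a and b unify, otherwise None."""
    a, b = resolve(a, bindings), resolve(b, bindings)
    if a == b:
        return bindings
    if is_var(a):
        return {**bindings, a: b}
    if is_var(b):
        return {**bindings, b: a}
    if isinstance(a, dict) and isinstance(b, dict) and a.keys() == b.keys():
        pairs = [(a[key], b[key]) for key in a]
    elif isinstance(a, list) and isinstance(b, list) and len(a) == len(b):
        pairs = list(zip(a, b))
    else:
        return None
    for left, right in pairs:
        bindings = unify(left, right, bindings)
        if bindings is None:
            return None
    return bindings


pattern = {"class": "group", "name": "?g",
           "content": [{"class": "action", "name": "?a"}]}
model = {"class": "group", "name": "tab1",
         "content": [{"class": "action", "name": "action1"}]}
result = unify(pattern, model, {})
```

Matching a rule pattern against every node in a model is where the cross product mentioned above arises, since each pattern term may match many instances.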

It isn't clear at this point whether this will need a backtracking mechanism, such as the chronological backtracking technique used in the Prolog language, or perhaps some form of dependency based backtracking. Note this can also be related to work on constraint satisfaction and explanation based learning. A promising idea (constraint propagation) is to combine search with consistency checking to avoid late detection of inconsistencies.

We need a way to define models for use in test cases, and a corresponding internal representation that is convenient for writing the algorithms. The XML representation for ASFE-DL is very verbose and the XML DOM would be a poor match for the kinds of reasoning expected. One idea is JSON as this is trivial to map into an object structure, another is to define a simple text format, as parsing is easy.

Note that for the purpose of developing a design assistant, we can skip over details that are unimportant to the reasoner. This includes the details for enumerations and action handlers and event handlers, etc. This would be included when integrating the reasoner into the user interface authoring tool, along with richer descriptions of components and a full treatment of events.

Here is a possible JSON representation of the AUI model for the first example:

    {
      "class" : "group",
      "name" : "demo",
      "content": [
        {
          "class" : "group",
          "name" : "tab1",
          "content" : [
            {
              "class" : "input",
              "name" : "text1",
              "type" : "text"
            },
            {
              "class" : "range",
              "name" : "range1",
              "type" : "integer",
              "min" : 0,
              "max" : 1024
            },
            {
              "class" : "selection",
              "name" : "selection1",
              "type" : "enumeration",
              "options" : [
                "choice1",
                "choice2",
                "choice3"
              ]
            },
            {
              "class" : "action",
              "name" : "action1",
              "handler" : "method call details"
            },
            {
              "class" : "selection",
              "name" : "selection2",
              "type" : "enumeration",
              "options" : [
                "choice1",
                "choice2"
              ]
            },
            {
              "class" : "option",
              "name" : "option1",
              "type" : "boolean",
              "default" : false
            },
            {
              "class" : "range",
              "name" : "range2",
              "type" : "sequence",
              "options" : [
                "yes",
                "unsure",
                "no"
              ]
            }
          ]
        },
        {
          "class" : "group",
          "name" : "tab2",
          ...
        },
        ...
      ]
    }
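As a hedged illustration of how directly JSON maps into an object structure, the following Python loads a cut-down version of such a model and walks it top down. The embedded JSON is a shortened stand-in for the example, and the function name `walk_model` is hypothetical.

```python
import json

# A shortened stand-in for the AUI model, without any elided parts.
AUI_JSON = """
{ "class": "group", "name": "demo", "content": [
    { "class": "group", "name": "tab1", "content": [
        { "class": "input", "name": "text1", "type": "text" },
        { "class": "range", "name": "range1", "type": "integer",
          "min": 0, "max": 1024 }
    ]}
]}
"""


def walk_model(node, depth=0):
    """Yield (depth, class, name) for every node in the model, top down."""
    yield depth, node["class"], node["name"]
    for child in node.get("content", []):
        yield from walk_model(child, depth + 1)


model = json.loads(AUI_JSON)
outline = list(walk_model(model))
```

The same traversal could serve as the basis for top down propagation, with a matching bottom up pass visiting children before their parent.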

JSON is very flexible when it comes to optional properties for objects. If there is a fixed set of properties, a more concise representation is possible, e.g.

    group demo
    {
      group tab1
      {
        input text1 text;
        range range1 integer 0 1024;
        selection selection1 enumeration
          [ "choice1", "choice2", "choice3"];
        action action1 "method call details";
        selection selection2 enumeration [ "choice1", "choice2"];
        range range2 sequence ["yes", "unsure", "no"];
      }
      group tab2
      {
      }
      ...
    }

A space-separated list of arguments is easy to process: the first argument selects the method that is called to process the rest. If appropriate, JSON could be used for arguments where extra flexibility is needed. This representation is a pain to embed in scripts since you need special treatment for text strings that span multiple lines. This can be avoided by embedding the definition in the web page, e.g. with a PRE element, or in a separate text file that is loaded by the script using HTTP.
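A parser for the concise format might look roughly like the sketch below. The token pattern, the `HANDLERS` table and the fallback record with a raw `args` list are assumptions made for illustration. Error handling is minimal and the `...` placeholders in the example are not supported.

```python
import re

# Quoted strings, single punctuation characters, or bare words.
TOKEN = re.compile(r'"[^"]*"|[{}\[\];,]|[^\s{}\[\];,"]+')

# The first word of a statement selects the handler for the rest (hypothetical).
HANDLERS = {
    "input": lambda name, args: {"class": "input", "name": name, "type": args[0]},
    "action": lambda name, args: {"class": "action", "name": name, "handler": args[0]},
}


def parse_block(tokens, pos):
    """Parse statements until a closing brace or the end of the input."""
    nodes = []
    while pos < len(tokens) and tokens[pos] != "}":
        cls, name = tokens[pos], tokens[pos + 1]
        pos += 2
        if cls == "group":
            if tokens[pos] != "{":
                raise ValueError(f"expected '{{' after group {name}")
            content, pos = parse_block(tokens, pos + 1)
            pos += 1  # skip the closing brace
            nodes.append({"class": "group", "name": name, "content": content})
            continue
        args = []
        while tokens[pos] != ";":
            if tokens[pos] == "[":
                end = tokens.index("]", pos)
                args.append([t.strip('"') for t in tokens[pos + 1:end] if t != ","])
                pos = end + 1
            else:
                args.append(tokens[pos].strip('"'))
                pos += 1
        pos += 1  # skip the semicolon
        default = lambda name, args, cls=cls: {"class": cls, "name": name, "args": args}
        nodes.append(HANDLERS.get(cls, default)(name, args))
    return nodes, pos


def parse(text):
    nodes, _ = parse_block(TOKEN.findall(text), 0)
    return nodes


SOURCE = """
group demo
{
  group tab1
  {
    input text1 text;
    range range1 integer 0 1024;
    selection selection1 enumeration [ "choice1", "choice2" ];
    action action1 "method call details";
  }
}
"""
tree = parse(SOURCE)
```

Keeping the source in a separate string or file, as suggested above, sidesteps the problem of multi-line text strings in scripts.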