The Semantic Web and its applications at W3C

Dominique Hazaël-Massieux

W3C Team (Aix-en-Provence)

SIMO - Madrid

slides: http://www.w3.org/2003/Talks/simo-semwebapp/

Overview

- Semantic Web Technologies

- Examples of Semantic Web Applications in W3C Work

Semantic Web Technologies (1)

Goal: Adding a Web of data to the Web of documents

Foundation: RDF (Resource Description Framework) lets anyone state anything about anything

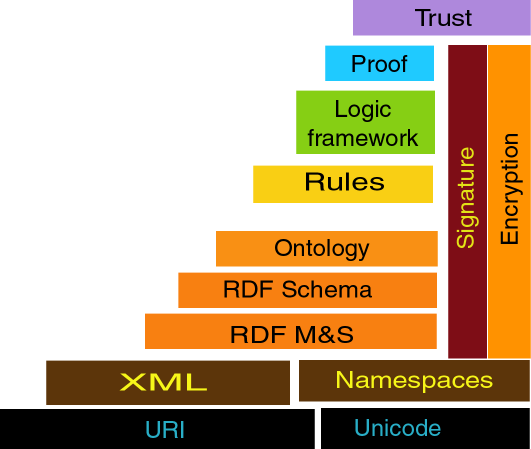

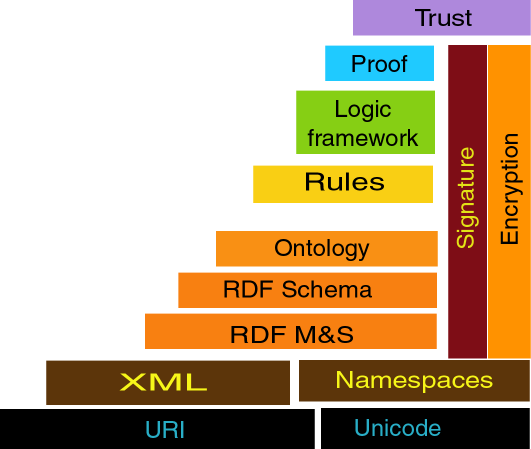
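To make "anything about anything" concrete, here is a minimal sketch in plain Python. It is not a real RDF library: a statement is modeled as a (subject, predicate, object) tuple and a graph as a set of such tuples. The Dublin Core namespace is real; everything under http://example.org/ is hypothetical.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    """One RDF statement: subject, predicate, object."""
    subject: str
    predicate: str
    obj: str


DC = "http://purl.org/dc/elements/1.1/"  # Dublin Core element namespace
EX = "http://example.org/"               # hypothetical namespace for illustration

# A graph is just a set of statements; any resource can be described by anyone.
graph = {
    Triple(EX + "doc1", DC + "title", "An Example Document"),
    Triple(EX + "doc1", DC + "creator", EX + "alice"),
    Triple(EX + "alice", EX + "worksFor", EX + "w3c"),
}


def objects(g, subject, predicate):
    """Return every object stated for the given (subject, predicate) pair."""
    return {t.obj for t in g if t.subject == subject and t.predicate == predicate}


print(objects(graph, EX + "doc1", DC + "title"))  # {'An Example Document'}
```

Note that EX + "alice" appears both as an object and as a subject: because terms are URIs, statements made in different places connect into one graph.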

Semantic Web Technologies (2)

The layers of Semantic Web Technologies

So what?

- RDF has an XML syntax (RDF/XML) → internationalization, XSLT (the XML transformation language) and other common XML tools

- RDF is perfect for merging data from various sources, and for mixing namespaces

- RDF is for the Web → easier to share, to protect, to retrieve

- a consistent stack of technologies → better integration

- using URIs → network effect
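Why RDF is good at merging data from various sources can be sketched in a few lines, again assuming triples as plain Python tuples and hypothetical example URIs. Merging two graphs is essentially a set union: a shared URI makes both sources talk about the same node, and identical statements collapse. This sketch ignores blank nodes, which a real RDF merge must rename apart first.

```python
EX = "http://example.org/"  # hypothetical namespace

# Two independently maintained sources describing the same (hypothetical) spec.
webmaster_data = {
    (EX + "TR/foo", EX + "title", "Foo 1.0"),
    (EX + "TR/foo", EX + "status", "REC"),
}
qa_data = {
    (EX + "TR/foo", EX + "title", "Foo 1.0"),  # same statement, stated twice
    (EX + "TR/foo", EX + "validator", EX + "tools/foo-check"),
}

# Merging is set union: the shared URI EX + "TR/foo" lines the sources up,
# and the duplicated title statement appears only once in the result.
merged = webmaster_data | qa_data


def properties_of(graph, subject):
    """List the (predicate, object) pairs known about a subject, sorted."""
    return sorted((p, o) for s, p, o in graph if s == subject)


for predicate, value in properties_of(merged, EX + "TR/foo"):
    print(predicate, value)
```

No schema negotiation or key mapping is needed beforehand; that is the practical meaning of "using URIs → network effect".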

Not tomorrow, today

These benefits are NOT theoretical...

Not everything is standardized yet, but the prototypes are promising

→ Semantic Web technologies are already integrated into W3C's own work

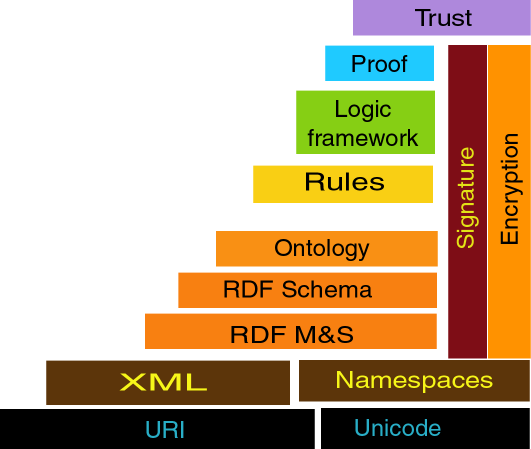

The layers of Semantic Web Technologies: what's standard, being standardized, experimental

W3C Process and Deliverables

- W3C's main deliverables are its Technical Reports (TR)

- produced following a formally adopted process

- in a fairly decentralized way

- TR page: more than 400 referenced documents, produced by more than 500 editors

- maintained by hand until Nov. 2002 (I know, I used to do it!)

- now managed with Semantic Web technologies

TR automation (2)

- W3C specifications must conform to the W3C Publication Rules:

- → a common format across W3C specifications

- a common set of metadata (title, date, list of editors, publication status, ...)

- before: this metadata was copied by hand into the list of Technical Reports
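Because every specification follows the same conventions, its metadata can be read by a program instead of being copied by hand. The sketch below assumes a hypothetical, heavily simplified XHTML header (a title plus paragraphs marked class="date" and class="editor"); the real Publication Rules markup is different, and the spec URI is invented.

```python
import xml.etree.ElementTree as ET

XHTML = "{http://www.w3.org/1999/xhtml}"  # XHTML namespace in ElementTree notation

# Hypothetical, simplified spec header; real Publication Rules markup differs.
SPEC = """<html xmlns="http://www.w3.org/1999/xhtml">
<head><title>Foo 1.0</title></head>
<body>
  <p class="date">2003-11-12</p>
  <p class="editor">Alice Example</p>
  <p class="editor">Bob Example</p>
</body></html>"""


def extract_metadata(uri, xhtml):
    """Turn the conventional header of a spec into (subject, predicate, object) triples."""
    root = ET.fromstring(xhtml)
    title = root.find(f"{XHTML}head/{XHTML}title").text
    triples = {(uri, "title", title)}
    for p in root.iter(f"{XHTML}p"):
        cls = p.get("class")
        if cls in ("date", "editor"):  # only the conventional metadata slots
            triples.add((uri, cls, p.text))
    return triples


meta = extract_metadata("http://example.org/TR/foo", SPEC)
```

The point of the common format is that one extractor works for every document; any spec that breaks the conventions shows up as missing metadata rather than silently wrong data.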

TR automation (3)

But now:

TR automation (4)

Modeling the process as rules

→ a completely formalized digital library

(in reality, the process is a bit more complex)
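Rule engines such as CWM express rules in Notation3 and apply them to the data until nothing new can be derived. As a rough Python stand-in (not CWM or N3 syntax), each rule below is a function from a graph to newly inferred triples, and forward chaining repeats them until a fixpoint. Both rules and all identifiers are hypothetical.

```python
def rec_section(g):
    """Hypothetical rule: every Recommendation goes in the 'Recommendations' section."""
    return {(s, "inSection", "Recommendations") for s, p, o in g
            if p == "status" and o == "REC"}


def editor_contributes(g):
    """Hypothetical rule: an editor of a document contributes to the group that produced it."""
    produced_by = {(s, o) for s, p, o in g if p == "producedBy"}
    return {(e, "contributesTo", wg) for s, p, e in g if p == "editor"
            for doc, wg in produced_by if doc == s}


def forward_chain(graph, rules):
    """Apply every rule until no new triple appears (a fixpoint)."""
    graph = set(graph)
    while True:
        new = set().union(*(rule(graph) for rule in rules)) - graph
        if not new:
            return graph
        graph |= new


facts = {
    ("doc:foo", "status", "REC"),
    ("doc:foo", "editor", "person:alice"),
    ("doc:foo", "producedBy", "wg:foo"),
}
closure = forward_chain(facts, [rec_section, editor_contributes])
```

Keeping the rules separate from the engine is what makes the library "completely formalized": changing the process means editing a rule, not rewriting the program.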

Benefits of TR automation

Webmaster's point of view:

- fewer manual steps → fewer errors

- much more efficient (publication rate grows steadily)

- new views of the TR page (by editor, by date, by title, by W3C Activity) come for free! (thanks to XSLT)
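At W3C these views are produced with XSLT; the Python sketch below only illustrates the underlying idea under that substitution: once the metadata is data, a "view" is just the same triples grouped by a different property. The document identifiers are hypothetical.

```python
from collections import defaultdict


def view_by(graph, predicate):
    """Group documents by the value of one property, e.g. 'editor' or 'date'."""
    index = defaultdict(list)
    for s, p, o in graph:
        if p == predicate:
            index[o].append(s)
    # Sort both the keys and each group so the view is stable.
    return {key: sorted(docs) for key, docs in sorted(index.items())}


tr_data = {
    ("doc:a", "editor", "Alice"),
    ("doc:b", "editor", "Alice"),
    ("doc:b", "editor", "Bob"),
    ("doc:a", "date", "2003-01-01"),
}
by_editor = view_by(tr_data, "editor")
by_date = view_by(tr_data, "date")
```

Adding a new view (say, by W3C Activity) needs only a new grouping key, not new data entry.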

But even better!

The data gets reused and enriched all over the place!

A basis for other works

- The QA Matrix now uses this as a basis for its data:

- only maintains what's relevant (validators, test suites, ...)

- gets automatic updates from the RDF version of the TR list

- Translations at W3C

- only maintains what's relevant (translation URIs, translators, ...)

- provides various views (by technology, by language)

- other sites reuse our digital library as a basis for their own work
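The pattern shared by the QA Matrix and the translations list is that each team stores only its own facts and borrows the rest by URI. A minimal sketch, assuming shortened hypothetical identifiers in place of full URIs: the translations data holds no titles, which are taken from the TR data at view time.

```python
from collections import defaultdict

# Hypothetical TR list, maintained by the Webmaster.
tr_list = {
    ("tr:foo", "title", "Foo 1.0"),
    ("tr:bar", "title", "Bar 2.0"),
}

# Hypothetical translations list: only translation-specific facts.
translations = {
    ("t:1", "translationOf", "tr:foo"), ("t:1", "language", "es"),
    ("t:2", "translationOf", "tr:foo"), ("t:2", "language", "fr"),
    ("t:3", "translationOf", "tr:bar"), ("t:3", "language", "es"),
}


def by_language(graph):
    """View of translated specs grouped by language, titles joined in from the TR data."""
    title = {s: o for s, p, o in graph if p == "title"}
    language = {s: o for s, p, o in graph if p == "language"}
    view = defaultdict(list)
    for t, p, spec in graph:
        if p == "translationOf":
            view[language[t]].append(title[spec])
    return {lang: sorted(titles) for lang, titles in sorted(view.items())}


view = by_language(tr_list | translations)
```

When the Webmaster corrects a title, every view built this way picks up the change without anyone touching the translations list.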

Related to...

It's actually all about integration

Integration of Web Technologies:

- XML → Internationalization (e.g. in Translations)

- XSLT gives us full control over how our data is processed and presented

- RDF/S brings modeling of real entities, in a self-describing way

- XHTML can be used as output and even as input (through social/technical conventions)

- SVG (vector graphics) makes the output even more powerful

It's all about integration (2)

Integration of tools:

- CWM and RDFLib

- Any (reasonably compliant) XSLT processor

Decentralized data management

I'm presenting this work, but I have built only a small piece of it... Data are managed:

- by the Webmaster (list of TRs)

- by the Quality Assurance Team (Matrix data)

- by the Translations Management Team (Translations list)

- by the Communication Team (structural data about W3C)

- by the Working Group Chairs (detailed info about WG)

In different formats: RDF/XML, Notation3, (X)HTML
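Different teams can use different syntaxes because every syntax is parsed into the same triples. As an illustration only, the parser below handles a deliberately simplified subset of N-Triples (a line-based RDF syntax, close to a subset of Notation3): no escapes, language tags, datatypes, or blank nodes. It also flattens URIs and literals into plain strings, which a real parser would keep distinct.

```python
import re

# Simplified N-Triples subset: <s> <p> <o> .   or   <s> <p> "literal" .
LINE = re.compile(r'^<([^>]*)>\s+<([^>]*)>\s+(?:<([^>]*)>|"([^"]*)")\s*\.\s*$')


def parse_ntriples_subset(text):
    """Parse the simplified subset into a set of (subject, predicate, object) strings."""
    triples = set()
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip blank lines and comments
            continue
        m = LINE.match(line)
        if m is None:
            raise ValueError(f"unsupported line: {line}")
        s, p, uri_obj, literal = m.groups()
        triples.add((s, p, uri_obj if uri_obj is not None else literal))
    return triples


DOC = """
# hypothetical data
<http://example.org/TR/foo> <http://purl.org/dc/elements/1.1/title> "Foo 1.0" .
<http://example.org/TR/foo> <http://purl.org/dc/elements/1.1/creator> <http://example.org/alice> .
"""
parsed = parse_ntriples_subset(DOC)
```

Once parsed, these triples can be merged with triples extracted from RDF/XML or XHTML exactly as if they had been written in the same format.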

Decentralized data manipulation

(credits go to)

- Ryan Lee

- Ivan Herman

- Dan Connolly

- (myself)

Because the data are on the Web (identified by URIs), data from various origins can be combined without trouble (network effect)

Upcoming uses

- reports of discrepancies in the data

- graphical navigation of the W3C site (?) and of the TR page (in progress)

- more statistical analysis of our work

- more automation of our processes
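Reports of discrepancies fall out naturally once the data from all teams sits in one graph: a check is just a query for statements that should exist but do not. The two checks below are hypothetical examples, not W3C's actual consistency rules, and the identifiers are invented.

```python
def discrepancies(graph):
    """Report documents that break two hypothetical consistency rules."""
    documents = {s for s, p, o in graph if p == "status"}       # known TR entries
    edited = {s for s, p, o in graph if p == "editor"}
    translated = {o for s, p, o in graph if p == "translationOf"}
    report = [f"{d}: no editor listed" for d in sorted(documents - edited)]
    report += [f"{d}: translated but not in the TR list"
               for d in sorted(translated - documents)]
    return report


merged_data = {
    ("tr:foo", "status", "REC"),
    ("tr:foo", "editor", "Alice"),
    ("tr:bar", "status", "WD"),           # no editor recorded
    ("t:1", "translationOf", "tr:baz"),   # points at an unknown document
}
problems = discrepancies(merged_data)
```

Each team can then fix its own data at the source, which keeps the decentralized model intact.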

Comparisons with traditional solutions

- Modeling, Business/Processing Rules are not "lost" in the code

→ declarative approach more re-usable, easier to adapt

- HTTP is the most ubiquitous protocol

→ easy re-use of available tools and technologies (e.g. access control)

- technologies integrated from top to bottom, built on open standards (cf. XML success)

- highly decentralizable (cf. Web success :)

Thanks for your attention

Your questions are welcome!

References

More details: